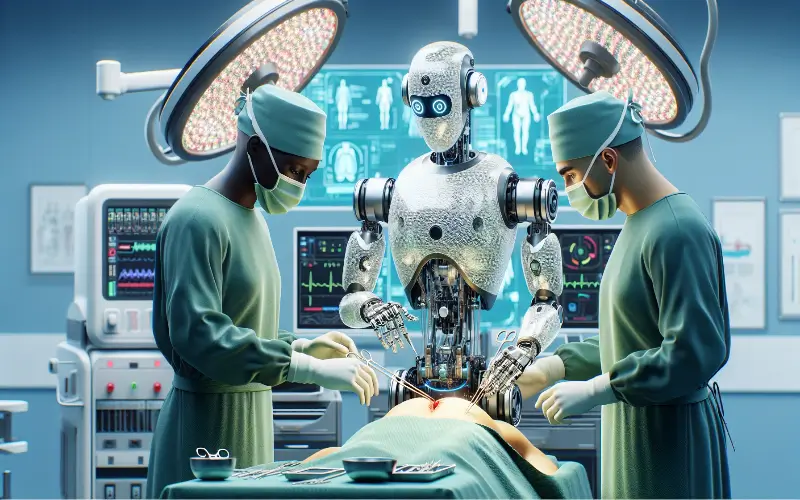

A new study has found that artificial intelligence tools are more likely to give incorrect medical advice when the misinformation comes from sources the software views as authoritative.

Researchers reported in The Lancet Digital Health that during tests involving 20 open-source and proprietary large language models, the systems were more easily misled by errors placed in realistic-looking doctors’ discharge notes than by mistakes found in social media discussions.

Dr. Eyal Klang of the Icahn School of Medicine at Mount Sinai in New York, who co-led the research, said in a statement that current AI systems often assume confident medical language is accurate, even when it is wrong. He explained that for these models, how information is written can matter more than whether it is actually correct.

The study highlights growing concerns about AI accuracy in healthcare. Many mobile applications now claim to use AI to help patients with medical concerns, although they are not meant to provide diagnoses. At the same time, doctors are increasingly using AI-supported systems for tasks such as medical transcription and surgical assistance.

To conduct the research, Klang and his team exposed AI tools to three types of material: genuine hospital discharge summaries, each with one intentionally false recommendation inserted; common health myths taken from Reddit; and 300 short clinical cases written by physicians.

After reviewing responses to more than one million prompts based on this content, researchers found that AI models accepted fabricated information in about 32% of cases overall. However, when misinformation appeared in what looked like an authentic hospital document, the rate at which the models accepted and repeated it rose to nearly 47%, according to Dr. Girish Nadkarni, chief AI officer of the Mount Sinai Health System and co-lead of the study.

In contrast, AI systems were more cautious with social media content. When false information came from Reddit posts, the rate at which AI repeated the misinformation dropped to 9%, Nadkarni said.

The researchers also noted that the wording of prompts influenced AI responses. Systems were more likely to agree with incorrect information when the prompt used an authoritative tone, for example by claiming that a senior clinician endorsed it.

The study found that OpenAI’s GPT models were the least vulnerable and the most effective at identifying false claims, while some other models accepted up to 63.6% of the false claims they were shown.

Nadkarni said AI has strong potential to support both doctors and patients by providing quicker insights and assistance. However, he emphasized the need for safeguards that verify medical claims before presenting them as facts, adding that the findings highlight areas where improvements are still needed before AI becomes fully integrated into healthcare.

In a separate study published in Nature Medicine, researchers found that asking AI about medical symptoms was no more helpful than using a standard internet search when patients were making health-related decisions.